Upgrade Digital Access from 5.13.1 and above to 6.0.5 and above

This article applies to upgrades from Digital Access 5.13.1 or later to 6.0.5 or later.

This article describes how to upgrade the Smart ID Digital Access component from version 5.13.x (5.13.1, 5.13.2, 5.13.3, 5.13.4 or 5.13.5) to 6.0.5 or later, for a single-node appliance as well as for a high availability or distributed setup.

Do not upgrade from 5.13.1 or later to 6.0.5 or later through the virtual appliance or the admin GUI. Instead, follow the steps in this article.

Important

- Version 5.13.0 cannot be upgraded directly to 6.0.5 or later. You must first upgrade to 5.13.1 or later, because 5.13.0 runs on Ubuntu 14.04, which Docker does not support.

- When upgrading from 5.13.1 or later to 6.0.5 or later, the appliance OS is not upgraded, so you must upgrade the OS manually. The recommended option, however, is to provide your own Linux virtual machine (VM) instead. If you still want to upgrade the appliance, first follow the instructions in Upgrade from 5.13 to 6.0.2, and then those in Upgrade Digital Access component from 6.0.2 and above to 6.0.5.
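The version rules above can be expressed as a small decision function. The following Python sketch is a hypothetical helper, not part of the Nexus upgrade scripts, and only encodes what this article states: 5.13.0 must first go to 5.13.1 or later, the recommended path from 5.13.1 or later is migrating to your own Linux VM, and keeping the appliance means going through 6.0.2 first. The returned strings are the guide titles named above; it assumes three-part version strings such as "5.13.2" and treats anything outside 5.13.x (and below 6.0.5) as not covered by this article.

```python
"""Hypothetical helper: which upgrade guide(s) to follow, per the rules in this article.

Assumptions: versions are three-part dotted numbers such as "5.13.2"; the
returned strings are guide titles from the article, not output of a Nexus tool.
"""

MIGRATE = "Upgrade Digital Access from 5.13.1 and above to 6.0.5 and above (Migrate)"
APPLIANCE_STEP_1 = "Upgrade from 5.13 to 6.0.2"
APPLIANCE_STEP_2 = "Upgrade Digital Access component from 6.0.2 and above to 6.0.5"


def parse_version(text: str) -> tuple[int, int, int]:
    """Convert "5.13.1" to (5, 13, 1) so versions compare numerically."""
    major, minor, patch = (int(part) for part in text.strip().split("."))
    return major, minor, patch


def upgrade_route(current: str, keep_appliance: bool = False) -> list[str]:
    """Return the ordered list of guides to follow to reach 6.0.5 or later."""
    version = parse_version(current)
    if version >= (6, 0, 5):
        return []  # already at the target version or later
    if not (5, 13, 0) <= version < (5, 14, 0):
        raise ValueError(f"{current} is outside the 5.13.x range this article covers")
    route = []
    if version < (5, 13, 1):
        # 5.13.0 runs Ubuntu 14.04, which Docker does not support.
        route.append("Upgrade to 5.13.1 or later")
    if keep_appliance:
        # The 5.13.1+ path does not upgrade the appliance OS, so go via 6.0.2.
        route += [APPLIANCE_STEP_1, APPLIANCE_STEP_2]
    else:
        route.append(MIGRATE)  # recommended: move to your own Linux VM
    return route
```

For example, `upgrade_route("5.13.2")` returns only the migration guide, while `upgrade_route("5.13.3", keep_appliance=True)` returns the two appliance guides in order.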

You only need to perform these steps once to set up the system to use Docker and Docker Swarm. After that, future upgrades are much simpler.

There are two options, described below, for upgrading from version 5.13.1 or later to 6.0.5 or later:

- Migrate (recommended) - This section describes how to upgrade Digital Access and migrate the instance from the appliance to a new virtual machine, by exporting all data and configuration files with the provided script.

- Upgrade - This section describes how to upgrade Digital Access on the existing appliance to the newer version.

Download latest updated scripts

If you downloaded the upgradeFrom5.13.tgz file before 29 October 2021, download it again to get the latest scripts.

Migrate Digital Access

- Make sure that the new machines have the required resources (memory, CPU and hard disk).

- Install Docker and xmlstarlet. The upgrade/migrate script installs Docker and xmlstarlet if they are not already installed, but for an offline upgrade (no internet connection on the machine), install the latest versions of Docker and xmlstarlet yourself before running the migration steps.

- The following ports must be open to traffic to and from each Docker host participating in an overlay network:

  - TCP port 2377 for cluster management communications.

  - TCP and UDP port 7946 for communication among nodes.

  - UDP port 4789 for overlay network traffic.

- For a High Availability setup only:

  - Make sure you have a matching set of machines, because services are placed the same way as in the existing setup. For example, if you have two appliances in a High Availability setup, you need two new machines to migrate the setup.

  - Identify the nodes. The new setup must have the same number of machines as the existing one, and you must create a mapping of machines from the old setup to the new setup.

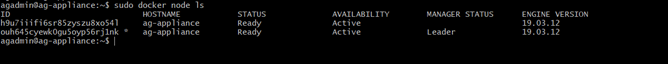

Identify the manager node. In a Docker Swarm deployment, one machine is the manager node. Make sure that the node running administration-service in the old setup is mapped to the manager node in the new setup during the upgrade/migration.
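Some of the prerequisites above can be checked with a short script before you start. The following Python sketch is a hypothetical helper, not part of the Nexus upgrade scripts: it reports whether docker and xmlstarlet are on the PATH, probes a TCP swarm port on a peer host, and validates an old-to-new machine mapping for a High Availability setup. All host and node names are placeholders. Note that a TCP connect test only succeeds if a service is already listening on the port (for example, port 2377 once the swarm has been initialized on the manager node), and the UDP ports 7946 and 4789 cannot be verified this way, so confirm those in your firewall rules.

```python
"""Hypothetical pre-migration checks (not a Nexus tool); requires Python 3.9+.

Host names, node names and the mapping are placeholders for your own setup.
"""
import shutil
import socket

REQUIRED_TOOLS = ("docker", "xmlstarlet")
# Only TCP swarm ports can be probed with connect(); UDP 7946 and 4789 cannot.
SWARM_TCP_PORTS = (2377, 7946)


def missing_tools(tools=REQUIRED_TOOLS) -> list[str]:
    """Return the tools not found on PATH (install these first for offline upgrades)."""
    return [tool for tool in tools if shutil.which(tool) is None]


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout.

    A refused connection may simply mean nothing is listening yet, not a firewall block.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_node_mapping(mapping: dict[str, str], old_nodes: list[str],
                       new_nodes: list[str], admin_service_node: str,
                       new_manager: str) -> list[str]:
    """Validate an old-to-new machine mapping; return a list of problems (empty if OK)."""
    problems = []
    if set(mapping) != set(old_nodes):
        problems.append("every old node must be mapped")
    if len(old_nodes) != len(new_nodes):
        problems.append("the new setup needs the same number of machines")
    if sorted(mapping.values()) != sorted(new_nodes):
        problems.append("each new machine must be used exactly once")
    if mapping.get(admin_service_node) != new_manager:
        problems.append("the old administration-service node must map to the new manager node")
    return problems
```

For example, with two old appliances old-a (running administration-service) and old-b, the mapping `{"old-a": "new-1", "old-b": "new-2"}` with new-1 as the manager passes all checks and `check_node_mapping` returns an empty list.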

Upgrade Digital Access

Upgrade manager node

Upgrade worker nodes (only applicable to a High Availability or distributed setup)

Perform the final steps on the manager node

Copyright 2024 Technology Nexus Secured Business Solutions AB. All rights reserved.
